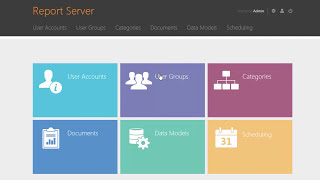

Authors: Marin, Dmitrii; Chang, Jen-Hao Rick*; Ranjan, Anurag; Prabhu, Anish; Rastegari, Mohammad; Tuzel, Oncel

Description: Pooling is commonly used to improve the computation-accuracy trade-off of convolutional networks. By aggregating neighboring feature values on the image grid, pooling layers downsample feature maps while maintaining accuracy. In transformers, however, tokens are processed individually and do not necessarily lie on regular grids. Utilizing pooling methods designed for image grids (e.g., average pooling) can thus be sub-optimal for transformers, as shown by our experiments. In this paper, we propose Token Pooling to downsample tokens in vision transformers. We take a new perspective: instead of assuming tokens form a regular grid, we treat them as discrete (and irregular) samples of a continuous signal. Given a target number of tokens, Token Pooling finds the set of tokens that best approximates the underlying continuous signal. We rigorously evaluate the proposed method on the standard transformer architecture (ViT/DeiT), and our experiments show that Token Pooling significantly improves the computation-accuracy trade-off without any further modifications to the architecture. On ImageNet-1k, Token Pooling enables DeiT-Ti to achieve the same top-1 accuracy while using 42% fewer computations.
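The idea of choosing a fixed number of tokens that best approximates the full token set can be illustrated with a small sketch. The description above does not specify the algorithm, so the code below makes an assumption: it uses plain K-means clustering on token feature vectors, which is one common way to minimize a squared reconstruction error, and it may differ from what the paper actually does. The names `pool_tokens`, `reconstruction_error`, and `sq_dist` are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence

Token = List[float]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pool_tokens(tokens: List[Token], k: int, iters: int = 10) -> List[Token]:
    """Reduce `tokens` to `k` representative tokens with K-means.

    Assumption: K-means stands in for "find the set of tokens that best
    approximates the underlying signal"; it is an illustrative choice,
    not a method confirmed by the description.
    """
    if not 1 <= k <= len(tokens):
        raise ValueError("k must be between 1 and the number of tokens")

    # Deterministic initialization: pick k evenly spaced input tokens.
    step = len(tokens) / k
    centers = [list(tokens[int(i * step)]) for i in range(k)]

    for _ in range(iters):
        # Assignment step: attach each token to its nearest center.
        clusters: List[List[Token]] = [[] for _ in range(k)]
        for t in tokens:
            nearest = min(range(k), key=lambda j: sq_dist(t, centers[j]))
            clusters[nearest].append(t)

        # Update step: move each center to the mean of its members.
        # An empty cluster keeps its previous center.
        for j, members in enumerate(clusters):
            if members:
                dim = len(members[0])
                centers[j] = [
                    sum(m[d] for m in members) / len(members)
                    for d in range(dim)
                ]
    return centers


def reconstruction_error(tokens: List[Token], centers: List[Token]) -> float:
    """Total squared distance from each token to its nearest center."""
    return sum(min(sq_dist(t, c) for c in centers) for t in tokens)


if __name__ == "__main__":
    toy_tokens = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
    pooled = pool_tokens(toy_tokens, k=2)
    print(pooled, reconstruction_error(toy_tokens, pooled))
```

Unlike grid-based average pooling, this kind of clustering never uses token positions on the image grid, only their feature values, which matches the view of tokens as irregular samples. In a real vision transformer the operation would run on batched tensors between transformer blocks; this pure-Python version only shows the logic.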
