As deep networks are increasingly deployed in memory-constrained and throughput-critical systems, AI models need to maintain accuracy, and with it trust, while consuming fewer resources. Researchers at IBM’s Almaden Research Laboratory have reached a new milestone in AI precision: they have developed an algorithm that matches the inference accuracy of a 32-bit network while using only 3 bits of precision.

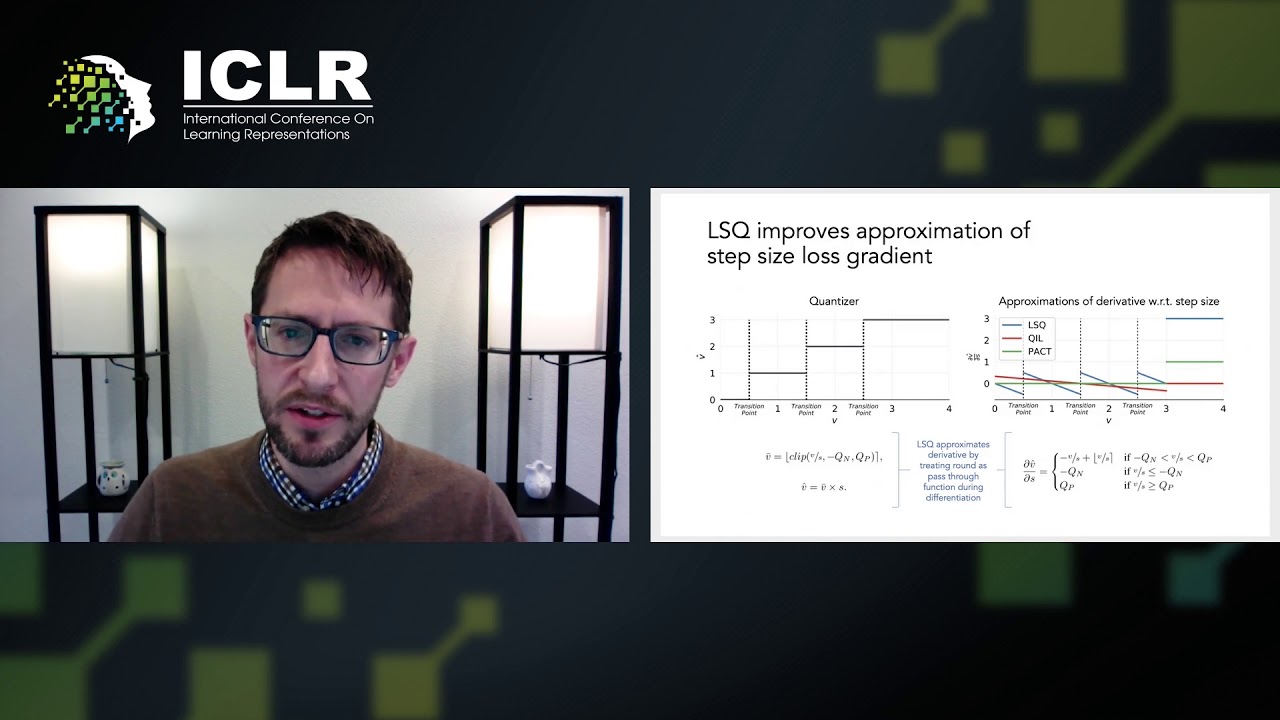

The researchers achieved this level of efficiency using a new technique called “learned step size quantization,” which improves the estimates of parameter changes made while training a low-precision network and so yields better performance. The research also uncovered evidence that AI systems optimized for performance on a given platform might run with as few as 2 bits. The advance brings AI systems steadily closer to the low levels of energy consumed by the human brain, while maintaining performance.
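
To make the idea of a learned step size concrete, here is a minimal sketch in plain Python. The article does not give the formulas, so everything here is an assumption: a uniform signed quantizer whose step size is a trainable parameter, with a straight-through estimate of the gradient with respect to that step size. The function names `lsq_quantize` and `lsq_step_gradient` are hypothetical and are not taken from the researchers' code.

```python
def lsq_quantize(values, step, bits=3):
    """Quantize values to a signed `bits`-bit grid with spacing `step`.

    Assumed form: scale by the step size, clip to the representable
    integer range, round, then scale back.
    """
    q_neg = 2 ** (bits - 1)        # 3 bits: integers down to -4
    q_pos = 2 ** (bits - 1) - 1    # 3 bits: integers up to 3
    return [round(max(-q_neg, min(q_pos, v / step))) * step for v in values]


def lsq_step_gradient(v, step, bits=3):
    """Estimated derivative of the quantized value with respect to `step`.

    Assumption: rounding is treated as the identity in the backward pass
    (a straight-through estimate), so the gradient is nonzero both inside
    the clipping range and at its edges.
    """
    q_neg = 2 ** (bits - 1)
    q_pos = 2 ** (bits - 1) - 1
    scaled = v / step
    if scaled <= -q_neg:
        return float(-q_neg)       # clipped at the lower edge
    if scaled >= q_pos:
        return float(q_pos)        # clipped at the upper edge
    return round(scaled) - scaled  # inside the range


weights = [-3.0, -0.6, 0.1, 0.7, 5.0]
print(lsq_quantize(weights, step=0.5))   # [-2.0, -0.5, 0.0, 0.5, 1.5]
print([lsq_step_gradient(w, step=0.5) for w in weights])
```

In a training loop, the step size would be updated by gradient descent alongside the weights, using this estimate chained with the loss gradient. That is the sense in which the step size is "learned" rather than fixed in advance.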