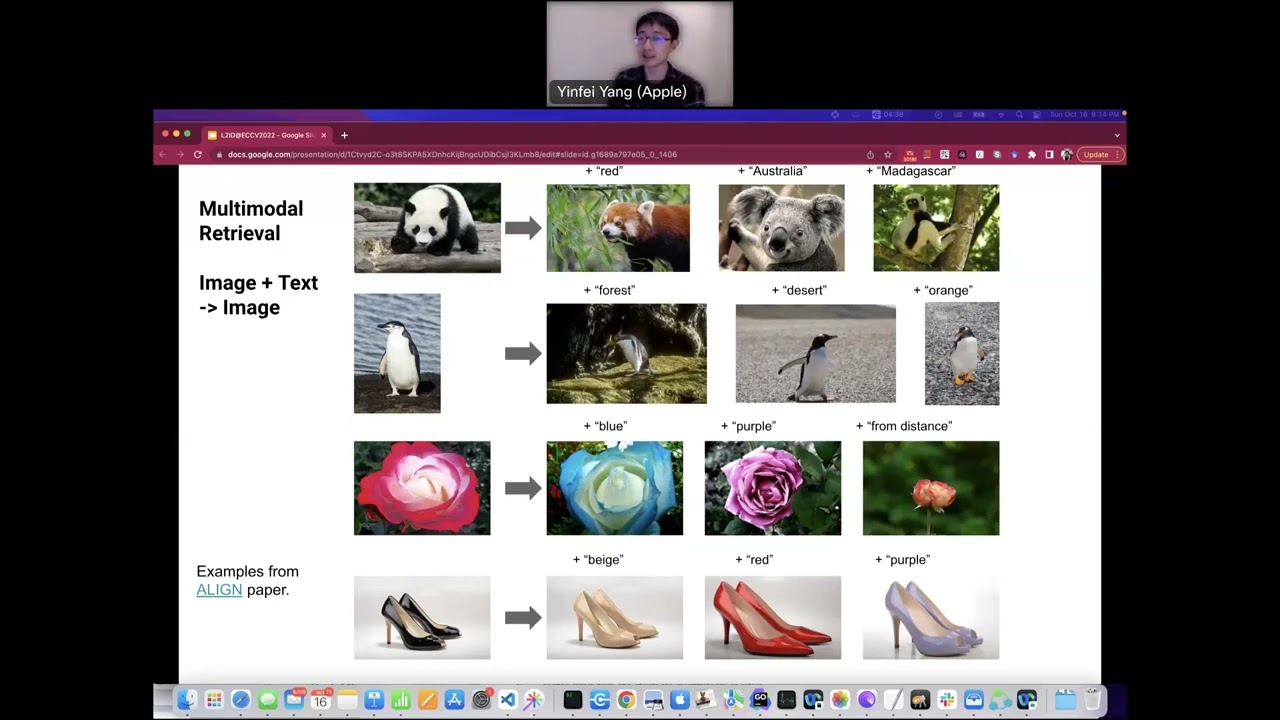

Abstract: Pre-training has become a key tool in state-of-the-art computer vision and language machine learning models. The benefits of very large-scale pre-training were first demonstrated by BERT in the language community, and by the BiT and ViT models in the vision community. However, popular vision-language datasets such as Conceptual Captions and MSCOCO usually involve a non-trivial data collection and cleaning process, which limits the size of the datasets and hence the large-scale training of image-text models. In recent work, researchers leverage noisy datasets of billions of image alt-text pairs mined from the Web for pre-training. The resulting models have shown strong performance on various visual and vision-language tasks, including image-text retrieval, captioning, and visual question answering. In addition, researchers show that the visual representations learned from noisy text supervision achieve state-of-the-art results on various vision tasks, including image classification, semantic segmentation, and object detection.

Bio: Yinfei Yang is a research manager at Apple AI/ML working on general visual intelligence. Previously, he was a staff research scientist at Google Research working on various NLP and computer vision problems. Before Google, he worked at Redfin and Amazon as a research engineer on machine learning and computer vision problems. Prior to that, he was a graduate student in computer science at UPenn. He received his master's in computer science. His research focuses on image and text representation learning for retrieval and transfer tasks. He is generally interested in problems in computer vision, natural language processing, or their combination.
