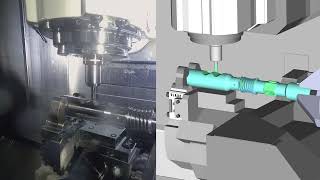

New vision sensors, such as the Dynamic and Active-pixel Vision Sensor (DAVIS), incorporate a conventional camera and an event-based sensor in the same pixel array. These sensors have great potential for robotics because they allow us to combine the benefits of conventional cameras with those of event-based sensors: low latency, high temporal resolution, and high dynamic range. However, new algorithms are required to exploit the sensor characteristics and cope with its unconventional output, which consists of a stream of asynchronous brightness changes (called “events”) and synchronous grayscale frames. In this paper, we present a low-latency visual odometry algorithm for the DAVIS sensor using event-based feature tracks. Features are first detected in the grayscale frames and then tracked asynchronously using the stream of events. The features are then fed to an event-based visual odometry algorithm that tightly interleaves robust pose optimization and probabilistic mapping. We show that our method successfully tracks the 6-DOF motion of the sensor in natural scenes. This is the first work on event-based visual odometry with the DAVIS sensor using feature tracks.
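The step "detected in the grayscale frames and then tracked asynchronously using the stream of events" can be illustrated with a small sketch. This is a hypothetical illustration, not the authors' tracker: the description above does not specify the tracking algorithm, so the sketch assumes each feature is a set of model points (for example, edge pixels extracted from a grayscale frame around the feature) and swaps in a simple translation-only, ICP-style nearest-neighbor alignment. The names `Event`, `FeatureTrack`, `radius`, and `window` are all assumptions introduced here.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Event:
    """One asynchronous brightness change (hypothetical container)."""
    x: float
    y: float
    t: float       # timestamp in seconds (unused by this simplified tracker)
    polarity: int  # +1 brightness increase, -1 decrease


class FeatureTrack:
    """Hypothetical feature: model points relative to the feature center,
    detected once in a grayscale frame, then updated from events only."""

    def __init__(self, center: Point, model: List[Point],
                 radius: float = 10.0, window: int = 50):
        if window < 1:
            raise ValueError("window must be at least 1")
        self.center = center
        self.model = model      # assumed: edge points from the frame, centered on the feature
        self.radius = radius    # half-size of the square patch that collects events
        self.window = window    # number of events gathered before each alignment
        self.events: List[Point] = []

    def add_event(self, ev: Event) -> bool:
        """Store the event (in feature-local coordinates) if it falls in the patch.
        Returns False for events outside the patch."""
        cx, cy = self.center
        if abs(ev.x - cx) > self.radius or abs(ev.y - cy) > self.radius:
            return False
        self.events.append((ev.x - cx, ev.y - cy))
        if len(self.events) >= self.window:
            self._align()
            self.events.clear()
        return True

    def _align(self, iterations: int = 5) -> None:
        """Translation-only ICP: match each event to its nearest model point
        and shift by the mean residual, then move the feature center."""
        dx = dy = 0.0
        for _ in range(iterations):
            sx = sy = 0.0
            for ex, ey in self.events:
                px, py = ex - dx, ey - dy
                mx, my = min(self.model,
                             key=lambda m: (m[0] - px) ** 2 + (m[1] - py) ** 2)
                sx += px - mx
                sy += py - my
            n = len(self.events)
            dx += sx / n
            dy += sy / n
        self.center = (self.center[0] + dx, self.center[1] + dy)
```

Because the update runs every `window` events rather than once per frame, the feature position is refreshed at the event rate, which is the source of the low latency the abstract describes. A real tracker would also estimate rotation, reject outlier events, and use the timestamps; those are left out here for brevity.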

Reference: Beat Kueng, Elias Mueggler, Guillermo Gallego, Davide Scaramuzza, "Low-Latency Visual Odometry using Event-based Feature Tracks," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 2016.

[ Link ]

Our research page on event-based vision:

[ Link ]

For event-camera datasets and an event-camera simulator, see here: [ Link ]

Robotics and Perception Group, University of Zurich, 2016

[ Link ]