➡️ Get lifetime access to the complete scripts (and future improvements): [ Link ]

➡️ Runpod one-click fine-tuning template (affiliate link, supports Trelis' channel): [ Link ] (see [ Link ] for full setup)

➡️ Trelis Livestreams: Thursdays 5 pm Irish time on YouTube and X.

➡️ Newsletter: [ Link ]

➡️ Resources/Support/Discord: [ Link ]

VIDEO RESOURCES:

- Slides: [ Link ]

- Unsloth GitHub: [ Link ]

- Dataset: [ Link ]

TIMESTAMPS:

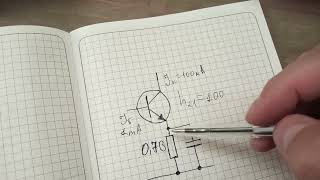

0:00 Comparing full fine-tuning and LoRA fine-tuning

1:57 Video Overview

3:53 Comparing VRAM, Training Time + Quality

8:42 How full fine-tuning works

9:03 How LoRA works

10:35 How QLoRA works

12:45 How to choose learning rate, rank and alpha

20:13 Choosing hyperparameters for Mistral 7B fine-tuning

21:39 Specific tips for QLoRA, regularization and adapter merging

26:16 Tips for using Unsloth

27:46 LoftQ - LoRA-aware quantisation

30:39 Step-by-step TinyLlama QLoRA

47:05 Mistral 7B Fine-tuning Results Comparison

52:29 Wrap up
