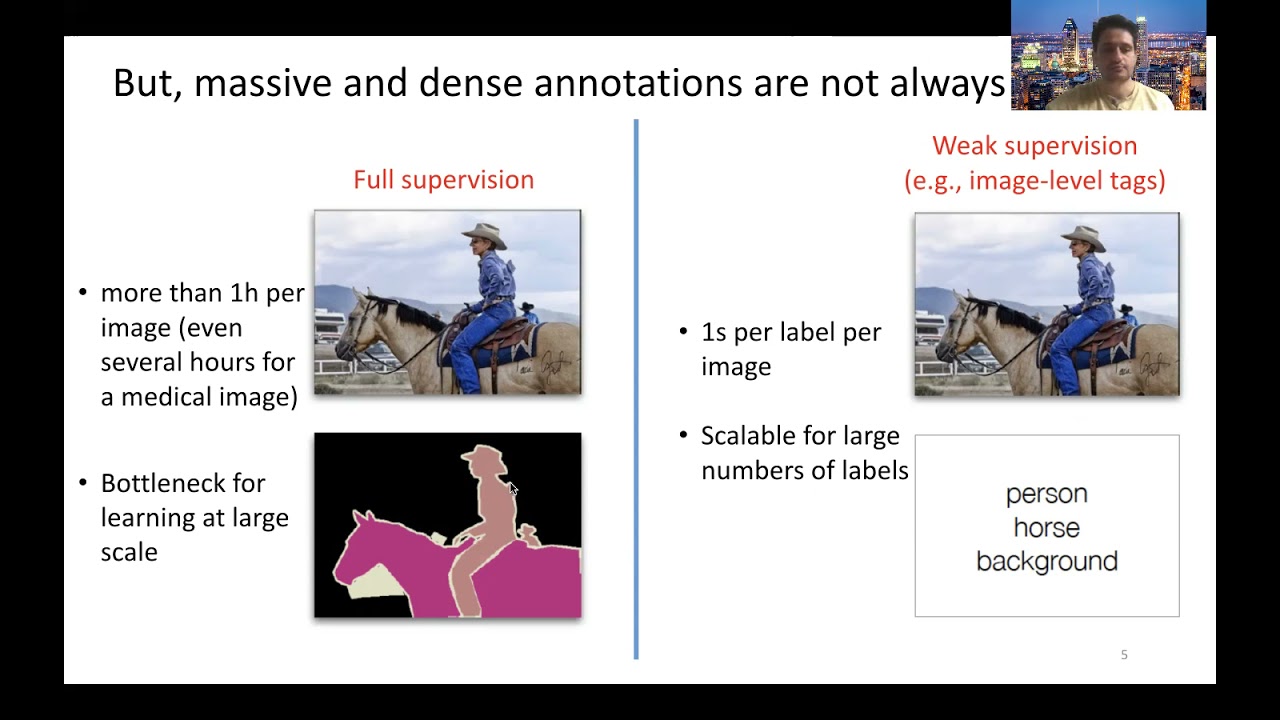

Weakly- and semi-supervised learning methods, which do not require full annotations and scale up to large problems and data sets, are currently attracting substantial research interest in both the CVPR and MICCAI communities. The general purpose of these methods is to mitigate the lack of annotations by leveraging unlabeled data with priors, either knowledge-driven (e.g., anatomy priors) or data-driven (e.g., domain adversarial priors). For instance, semi-supervision uses both labeled and unlabeled samples, weak supervision uses uncertain (noisy) labels, and domain adaptation attempts to generalize the representations learned by CNNs across different domains (e.g., different modalities or imaging protocols). In semantic segmentation, a large body of recent work has focused on training deep CNNs with very limited and/or weak annotations, for instance, scribbles, image-level tags, bounding boxes, points, or annotations limited to a single domain of the task (e.g., a single imaging protocol). Several of these works showed that adding specific priors in the form of unsupervised loss terms can achieve outstanding performance, close to full-supervision results, while using only a fraction of the ground-truth labels.
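
To make "priors in the form of unsupervised loss terms" concrete, here is a minimal sketch, not taken from any specific model discussed in the talk. It assumes binary segmentation, a flattened list of per-pixel foreground probabilities, and scribble labels that are 1 (foreground), 0 (background) or `None` (unlabeled). The supervised part is a partial cross-entropy over the scribbled pixels only; the prior is a hypothetical size constraint, written as a quadratic penalty on the soft foreground size when it leaves an assumed interval `[lower, upper]`. The function names and the weight `lam` are illustrative choices.

```python
import math


def partial_cross_entropy(probs, scribbles):
    """Cross-entropy averaged over annotated (scribbled) pixels only.

    probs: foreground probabilities in (0, 1), one per pixel.
    scribbles: 1 (foreground), 0 (background) or None (unlabeled) per pixel.
    """
    eps = 1e-8  # guards against log(0)
    terms = []
    for p, y in zip(probs, scribbles):
        if y is None:
            continue  # unlabeled pixels do not enter the supervised term
        terms.append(-math.log(p + eps) if y == 1 else -math.log(1.0 - p + eps))
    return sum(terms) / len(terms) if terms else 0.0


def size_penalty(probs, lower, upper):
    """Quadratic penalty when the soft foreground size leaves [lower, upper].

    This term uses no labels at all: it acts on every pixel's prediction.
    """
    size = sum(probs)  # soft size = sum of foreground probabilities
    if size < lower:
        return (lower - size) ** 2
    if size > upper:
        return (size - upper) ** 2
    return 0.0


def weak_loss(probs, scribbles, lower, upper, lam=0.01):
    """Partial cross-entropy plus a weighted (hypothetical) size prior."""
    return partial_cross_entropy(probs, scribbles) + lam * size_penalty(probs, lower, upper)


if __name__ == "__main__":
    probs = [0.9, 0.8, 0.2, 0.1]
    scribbles = [1, None, None, 0]  # only two pixels are annotated
    print(weak_loss(probs, scribbles, lower=1.0, upper=3.0))
```

In a real network these would be tensor operations so that gradients flow through `probs`; the penalty weight is one of the optimization choices the talk refers to, since a fixed penalty is only one way to impose such a constraint.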

This presentation gives an overview of recent developments in weakly supervised CNN segmentation. More specifically, we will discuss several recent state-of-the-art models and connect them from the perspective of imposing priors on the representations learned by deep networks. First, we will detail the loss functions driving these models, including, among others, knowledge-driven functions (e.g., anatomy, shape, or conditional random field losses), as well as commonly used knowledge- and data-driven priors. Then, we will discuss several possible optimization strategies for each of these losses and emphasize the importance of the choice of optimization strategy.
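
As an illustration of a knowledge-driven conditional random field loss, the sketch below computes one common relaxed Potts form for binary segmentation on a 4-connected pixel grid. Each neighbouring pair contributes its expected label disagreement, `p_i (1 - p_j) + p_j (1 - p_i)`, weighted by a Gaussian affinity on intensity differences, so boundaries are cheap along strong image edges and expensive inside homogeneous regions. The grid neighbourhood, the Gaussian kernel and the bandwidth `sigma` are assumptions for this sketch; the models covered in the talk may use other neighbourhoods, kernels or relaxations.

```python
import math


def crf_potts_loss(probs, image, sigma=0.1):
    """Relaxed Potts (CRF) loss on a 2-D grid with 4-connectivity.

    probs: H x W list of lists of foreground probabilities.
    image: H x W list of lists of grey levels (same shape as probs).
    Pair weight: w_ij = exp(-(I_i - I_j)^2 / (2 * sigma^2)).
    """
    h, w = len(probs), len(probs[0])
    loss = 0.0
    for r in range(h):
        for c in range(w):
            # Visit only the right and down neighbours so each pair counts once.
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr >= h or cc >= w:
                    continue
                pi, pj = probs[r][c], probs[rr][cc]
                diff = image[r][c] - image[rr][cc]
                wij = math.exp(-diff * diff / (2.0 * sigma * sigma))
                # Probability that the two pixels take different labels.
                loss += wij * (pi * (1.0 - pj) + pj * (1.0 - pi))
    return loss


if __name__ == "__main__":
    probs = [[1.0, 1.0], [0.0, 0.0]]  # top row foreground, bottom row background
    flat = [[0.0, 0.0], [0.0, 0.0]]   # no edge: the boundary is penalized
    edge = [[0.0, 0.0], [1.0, 1.0]]   # strong edge between rows: boundary is cheap
    print(crf_potts_loss(probs, flat), crf_potts_loss(probs, edge))
```

Because the loss is quadratic in the predictions, it can be minimized directly by gradient descent or through other schemes; which one works better is exactly the kind of optimization question raised above.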
