Patreon: [ Link ]

Advances in monocular depth estimation through supervised and self-supervised learning make it possible to infer a semantically coherent depth map. However, monocular depth estimation still lacks some features needed for practical embedded robotics applications. In such constrained scenarios, the scale and shift ambiguity, the computational requirements, and the lack of temporal stability are some of the barriers to production. In this talk, I introduce ongoing work to include spatio-temporal inductive bias in the models. I first show how the flexibility of transformer architectures could improve model efficiency at run time. Then, I take a quick tour of how we can leverage multi-view geometry through optical expansion and depth from motion to solve the inherent scale ambiguity of monocular models.
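As a rough illustration of how optical expansion can resolve scale, here is a minimal sketch under a pinhole camera model. It is not the method presented in the talk. It assumes a static object, a camera moving straight toward it by a known distance, and a measured expansion ratio `s = h2 / h1` (the object's image size in the second frame over the first). Since image size is inversely proportional to depth, `s = Z1 / Z2` with `Z1 = Z2 + t`, so `Z2 = t / (s - 1)`. The function names and the numbers below are hypothetical.

```python
def depth_from_expansion(expansion: float, forward_motion_m: float) -> float:
    """Metric depth (metres) at the second frame.

    Assumes a pinhole camera, a static object, and pure forward motion
    of `forward_motion_m` metres between the two frames.
    `expansion` is the image-size ratio h2 / h1 of the object.
    """
    if expansion <= 1.0:
        raise ValueError("expansion must be > 1 when moving toward the object")
    return forward_motion_m / (expansion - 1.0)


def metric_scale(relative_depth: float, metric_depth: float) -> float:
    """Factor that maps a scale-ambiguous depth value to metres."""
    if relative_depth <= 0.0:
        raise ValueError("relative depth must be positive")
    return metric_depth / relative_depth


# Hypothetical numbers: the object appears 25% larger after the camera
# moves 0.5 m forward, and the monocular model predicts a relative
# depth of 0.5 at that pixel in the second frame.
z2 = depth_from_expansion(expansion=1.25, forward_motion_m=0.5)
scale = metric_scale(relative_depth=0.5, metric_depth=z2)
print(z2, scale)
```

Multiplying the whole relative depth map by `scale` would then give metric depth, assuming the model's prediction is off by a scale factor only (no shift).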

00:00 Intro

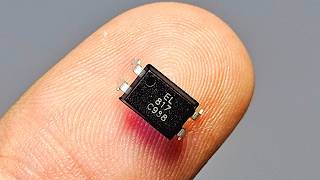

02:35 Hardware

03:13 Mammal perception

06:54 State of the art

10:00 Opportunities

11:00 Guided Attention

14:50 Efficient depth refinement

17:30 Recurrent formulation

22:00 Intermediate Summary

23:15 Optical expansion

24:40 Motion parallax

26:10 Solving scale ambiguity

27:00 Conclusion

28:00 Questions

[Chronique d'une IA]

Spotify: [ Link ]

Amazon Music: [ Link ]

Apple Podcasts: [ Link ]

[About me]

Visual Behavior: [ Link ]

Personal: [ Link ]

GitHub: [ Link ]

LinkedIn: [ Link ]

Twitter: [ Link ]