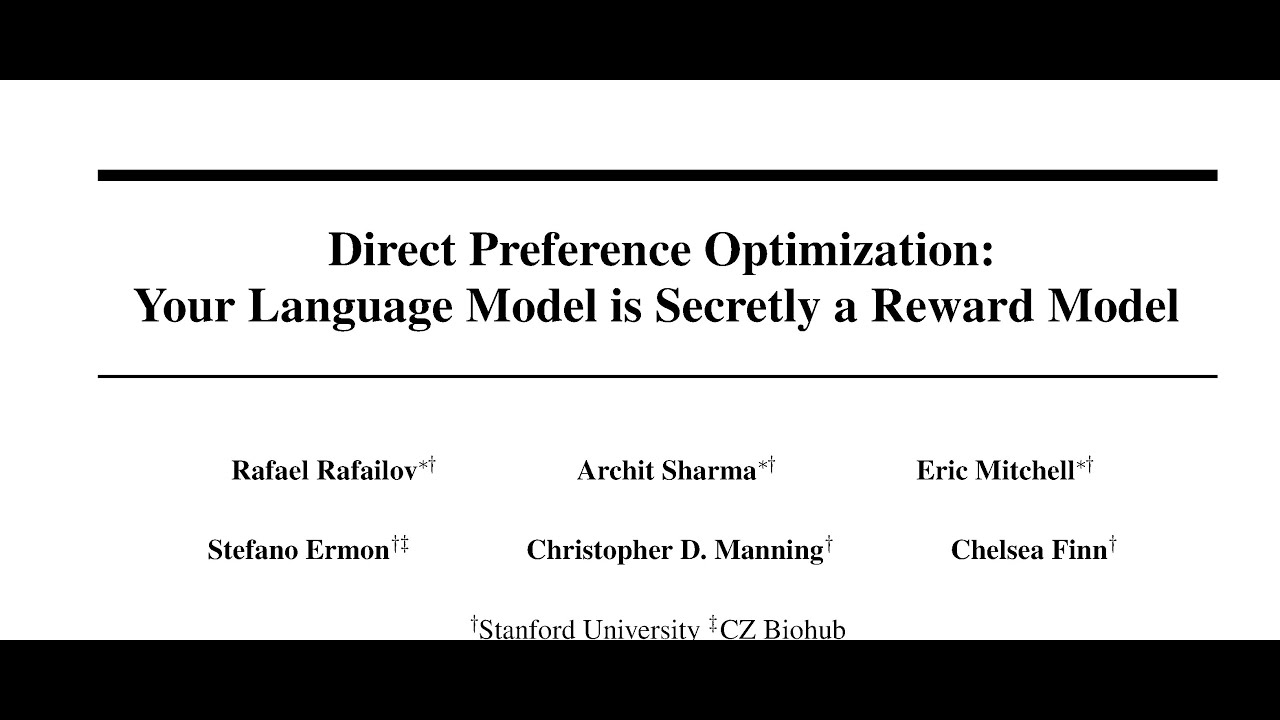

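The paper introduces Direct Preference Optimization (DPO), a method for fine-tuning large unsupervised language models (LMs) to align with human preferences. RLHF first fits a separate reward model and then optimizes the LM against it with reinforcement learning. DPO skips both steps and trains the LM directly on pairs of preferred and dispreferred responses with a simple classification-style loss, which makes it stable, performant, and computationally lightweight. In the paper's experiments, DPO controls the sentiment of generations better than PPO-based RLHF. It also matches or improves response quality on summarization and single-turn dialogue.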
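To make the "simple classification-style loss" concrete, here is a minimal sketch of the DPO objective for a batch of preference pairs. It assumes the summed log-probabilities of each chosen and rejected response have already been computed under two models: the policy being trained and a frozen reference model. For each pair, the loss is $-\log\sigma\big(\beta[(\log\pi_\theta(y_w|x)-\log\pi_{\text{ref}}(y_w|x))-(\log\pi_\theta(y_l|x)-\log\pi_{\text{ref}}(y_l|x))]\big)$. The function names and the default `beta=0.1` are illustrative choices, not taken from the authors' code.

```python
import math
from typing import Sequence


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    # For negative z, use log(sigmoid(z)) = z - log(1 + e^z) to avoid overflow.
    return z - math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen_logp: Sequence[float],
    policy_rejected_logp: Sequence[float],
    ref_chosen_logp: Sequence[float],
    ref_rejected_logp: Sequence[float],
    beta: float = 0.1,  # illustrative strength of the pull toward the reference model
) -> float:
    """Mean DPO loss over a batch of (chosen, rejected) response pairs.

    Each argument holds one summed log-probability per pair. These are
    hypothetical inputs that a real training loop would compute from model logits.
    """
    n = len(policy_chosen_logp)
    if n == 0 or not (n == len(policy_rejected_logp) == len(ref_chosen_logp) == len(ref_rejected_logp)):
        raise ValueError("all inputs must be non-empty and have the same length")

    total = 0.0
    for pc, pr, rc, rr in zip(policy_chosen_logp, policy_rejected_logp,
                              ref_chosen_logp, ref_rejected_logp):
        # Log-ratio of policy to reference for each response (the implicit reward, up to beta).
        chosen_ratio = pc - rc
        rejected_ratio = pr - rr
        # A binary classification logit: positive when the policy favors the chosen response
        # more strongly than the reference model does.
        margin = beta * (chosen_ratio - rejected_ratio)
        total += -log_sigmoid(margin)
    return total / n


if __name__ == "__main__":
    # Policy identical to the reference: the margin is 0 and the loss is log 2.
    print(dpo_loss([-1.0], [-2.0], [-1.0], [-2.0]))
    # Policy shifted toward the chosen response: the loss drops below log 2.
    print(dpo_loss([-0.5], [-3.0], [-1.0], [-2.0]))
```

No reward model is trained and no samples are drawn from the LM during this step. The loss needs only forward passes through the policy and the reference model on fixed preference data, which is where DPO's computational savings over PPO-based RLHF come from.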
